Read Data From a Website in Java Concurrently

Java - Concurrent Collections

[Last Updated: Jul 2, 2017]

This is a quick walk-through tutorial of Java Concurrent collections.

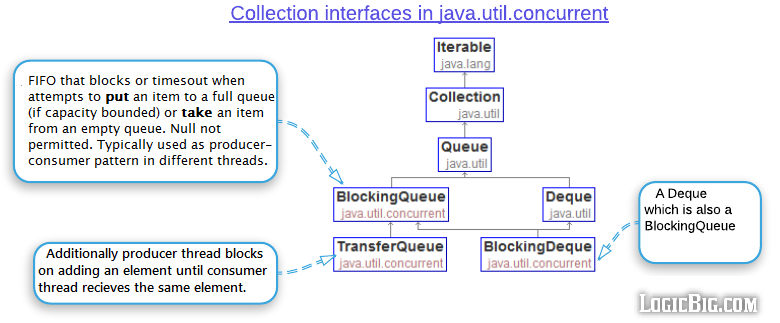

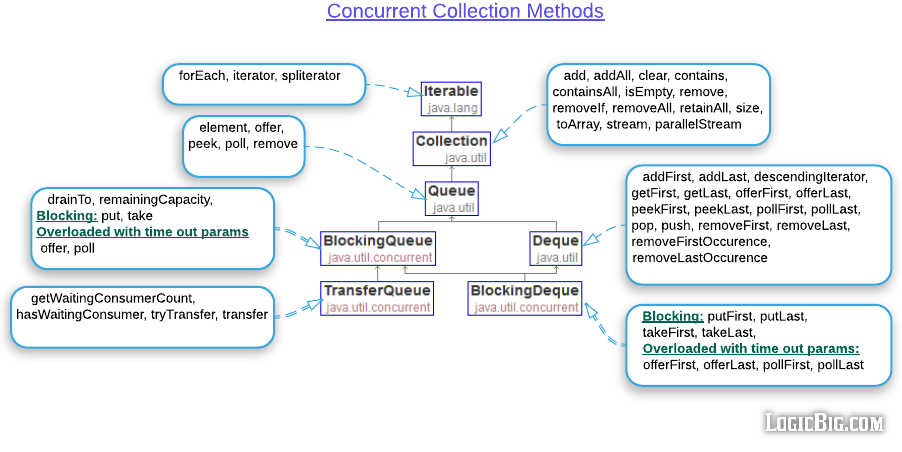

Interfaces

The java.util.concurrent package extends the Queue interface to define new ADTs: BlockingQueue, BlockingDeque and TransferQueue.
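Reflection offers a quick way to check this hierarchy. The sketch below prints the interfaces that each ADT directly extends. It relies on Class.getInterfaces(), which for an interface returns its direct superinterfaces. The class name QueueAdtHierarchy is only illustrative, and Java 9+ is assumed for List.of.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingDeque;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TransferQueue;

public class QueueAdtHierarchy {

    // Simple names of the interfaces that `type` directly extends.
    static List<String> directSuperInterfaces(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Class<?> parent : type.getInterfaces()) {
            names.add(parent.getSimpleName());
        }
        return names;
    }

    public static void main(String[] args) {
        List<Class<?>> adts = List.of(BlockingQueue.class, BlockingDeque.class, TransferQueue.class);
        for (Class<?> adt : adts) {
            System.out.println(adt.getSimpleName() + " extends " + directSuperInterfaces(adt));
        }
    }
}
```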

Operations

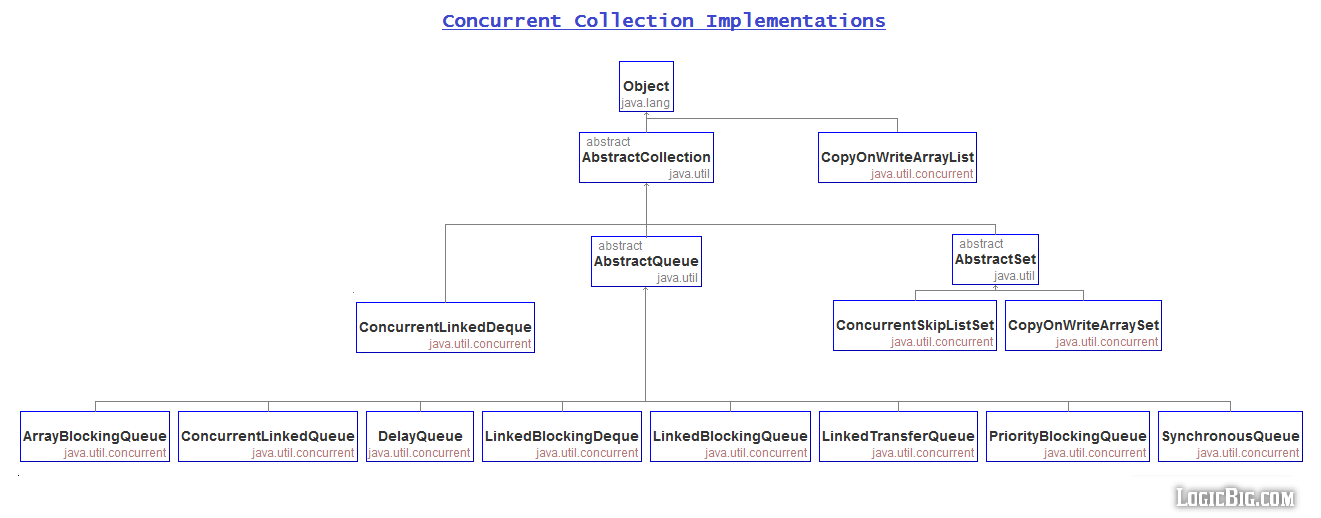
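The BlockingQueue Javadoc groups its methods into four forms, which differ in how they handle an operation that cannot complete immediately:

- one throws an exception (add, remove, element);
- one returns a special value (offer, poll, peek);
- one blocks (put, take);
- one times out (offer and poll with a timeout).

The sketch below records what each form does. The class name is hypothetical. The ArrayBlockingQueue of capacity 1 was chosen only because it makes the queue full or empty on demand.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

public class BlockingQueueForms {

    // Exercises each form on a queue of capacity 1 and logs the outcome.
    static List<String> demo() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        List<String> log = new ArrayList<>();
        try {
            queue.put("a");                                   // blocking form: succeeds, queue was empty
            try {
                queue.add("b");                               // throwing form: queue is now full
            } catch (IllegalStateException e) {
                log.add("add threw IllegalStateException");
            }
            log.add("offer returned " + queue.offer("b"));   // special-value form: false
            log.add("timed offer returned "
                    + queue.offer("b", 50, TimeUnit.MILLISECONDS)); // timed form: false after ~50 ms

            log.add("take returned " + queue.take());        // blocking form: "a"
            log.add("poll returned " + queue.poll());        // special-value form: null, queue is empty
            log.add("peek returned " + queue.peek());        // examine, special-value form: null
            try {
                queue.remove();                               // throwing form: queue is empty
            } catch (NoSuchElementException e) {
                log.add("remove threw NoSuchElementException");
            }
            log.add("timed poll returned "
                    + queue.poll(50, TimeUnit.MILLISECONDS)); // timed form: null after ~50 ms
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return log;
    }

    public static void main(String[] args) {
        demo().forEach(System.out::println);
    }
}
```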

Implementations

All collection implementations in the java.util.concurrent package are thread-safe. Rather than locking every method on one monitor, they achieve thread safety through fine-grained locking/synchronization or non-blocking algorithms.
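Here is a minimal sketch of what thread safety buys us. Several threads offer elements to one shared ConcurrentLinkedQueue, and the final size is read once all of them have finished. The class name and the thread/element counts are arbitrary. No external locking is needed for the count to come out as threads × perThread.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ConcurrentOfferDemo {

    // `threads` tasks each offer `perThread` elements to one shared queue;
    // returns the size after every task has finished.
    static int sizeAfterConcurrentOffers(int threads, int perThread) {
        Queue<Integer> queue = new ConcurrentLinkedQueue<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                for (int i = 0; i < perThread; i++) {
                    queue.offer(i);              // thread-safe without any external lock
                }
            });
        }
        pool.shutdown();                         // no new tasks; submitted ones still run
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return queue.size();                     // O(n), but accurate once the writers have stopped
    }

    public static void main(String[] args) {
        System.out.println(sizeAfterConcurrentOffers(4, 10_000));
    }
}
```

Replacing the queue with a plain java.util.LinkedList typically produces a smaller, unpredictable count or an exception. Such races are not guaranteed to appear on every run, however.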

| Implementation | ADT | Data Structure | Performance |
|---|---|---|---|
| ArrayBlockingQueue | BlockingQueue | Array of objects. Bounded. Supports a fairness policy: if fairness=true, threads blocked on insertion or removal are served in FIFO order. A classic bounded buffer. | offer, peek, poll, size: O(1); put, take: O(1) if blocking time is ignored. Fairness generally decreases throughput but avoids producer/consumer starvation. |
| LinkedBlockingQueue | BlockingQueue | Linked structure. Optionally bounded. | offer, peek, poll, size: O(1). put and take use separate locks, which gives higher throughput. Throughput is higher still if the queue is unbounded, because put never blocks on a full queue and more data passes through. Performance is less predictable because of dynamic memory allocation and fragmentation (locks are held longer). |
| ConcurrentLinkedQueue | Queue | Linked structure. Unbounded. Non-blocking but still thread-safe. Null is not permitted. An appropriate choice over LinkedBlockingQueue when many threads access the collection. | The methods employ an efficient non-blocking ("wait-free") algorithm. offer, peek, poll: O(1). size: O(n) because of the asynchronous nature of the queue. size() is not very useful in concurrent applications: if elements are added or removed while it runs, the result may be inaccurate. Iterators are weakly consistent: they don't throw ConcurrentModificationException, and they may not reflect changes made by other threads after the iterator was created. |
| DelayQueue | BlockingQueue | Internally uses a PriorityQueue instance, which in turn uses a binary heap. Unbounded. Each element has to implement the java.util.concurrent.Delayed interface. When Delayed.getDelay(TimeUnit unit) returns a value less than or equal to zero, the element becomes available for removal/polling. Available or not, the element is still part of the queue; for example, size() includes it. Null is not permitted. | offer, poll, remove() and add: O(log n); remove(Object), contains(Object): O(n); peek, element and size: O(1). |
| PriorityBlockingQueue | BlockingQueue | Binary heap. Unbounded. Uses the same ordering rules as PriorityQueue, i.e. elements are ordered by their natural ordering or by a Comparator provided at construction time. | offer, poll, remove() and add: O(log n); remove(Object), contains(Object): O(n); peek, element and size: O(1). put: O(log n); because the queue is unbounded, put never blocks. take: O(log n); it blocks until an element becomes available. |
| SynchronousQueue | BlockingQueue | No standard data structure. Applies the rendezvous channel pattern, where one thread hands off data to another thread. Each insert operation must wait for a corresponding remove operation by another thread, and vice versa. This very unusual collection stores no elements: a single piece of data is transferred from one thread to another, one at a time. Bounded: not applicable. | put, offer, take, poll: O(1). Other methods have no effect, as there's no formal collection data structure: peek() always returns null, isEmpty() always returns true, size() always returns 0, iterator() always returns an empty iterator, etc. |
| LinkedTransferQueue | TransferQueue | Linked data structure. Unbounded. Uses a handoff concept similar to SynchronousQueue (above), but it is a formal collection and has extra interface-level methods such as transfer and tryTransfer. A useful transfer scenario: if the queue already contains elements when transfer (or tryTransfer with a timeout) is called, the caller waits until the queue has been drained up to and including the transferred element. | offer, peek, poll: O(1); size: O(n). All blocking methods decrease throughput. Generally, LinkedTransferQueue outperforms SynchronousQueue by a wide margin. |
| LinkedBlockingDeque | BlockingDeque | Doubly linked nodes. Optionally bounded. | remove, removeFirstOccurrence, removeLastOccurrence, contains, iterator.remove() and the bulk operations: O(n). Other operations: O(1) (ignoring time spent blocking). |
| ConcurrentLinkedDeque | Deque | Doubly linked nodes. Unbounded. Non-blocking but still thread-safe. Null is not permitted. An appropriate choice over LinkedBlockingDeque when many threads access the collection. | Search-based methods such as contains and remove(Object): O(n). size: O(n), and not reliable because of asynchronous operations. All other methods: O(1). Iterators are weakly consistent: they don't throw ConcurrentModificationException, and they may not reflect changes made by other threads after the iterator was created. |
| CopyOnWriteArrayList | List | Array of objects. Thread-safe. All mutative methods (add, set, and so on) are implemented by making a fresh copy of the underlying array. | add, contains, remove(Object): O(n); get(int index), next: O(1). A lock is used only for write methods, to increase read throughput. Writes are costly because a new array is copied every time, so this class is not recommended when there are many random writes. Iterators are very fast (no locks and no thread interference). They never throw ConcurrentModificationException, and they don't reflect writes made after the iterator was created. Element-changing operations on iterators (remove, set and add) are not supported; these methods throw UnsupportedOperationException. |
| CopyOnWriteArraySet | Set | Array of objects. Internally uses a CopyOnWriteArrayList instance and avoids duplicate elements by using CopyOnWriteArrayList.addIfAbsent. Thread-safe. | add, contains, remove(Object): O(n); next: O(1). Iterator behavior is the same as for CopyOnWriteArrayList. |
| ConcurrentSkipListSet | NavigableSet | Backed by a ConcurrentSkipListMap (skip list data structure), where elements are stored as keys of the map. Thread-safe: insertion, removal and access operations safely execute concurrently by multiple threads. | contains, add and remove operations and their variants: O(log n); next: O(1). size: O(n), and not reliable because of asynchronous operations. Iterators and spliterators are weakly consistent. |
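The CopyOnWriteArrayList row above makes three claims about iterators:

- they never throw ConcurrentModificationException;
- they don't see writes made after their creation;
- they reject element-changing operations.

A small sketch that checks these claims (the class and method names are hypothetical):

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

public class CopyOnWriteSnapshotDemo {

    // Returns what an iterator created before a later add() actually sees.
    static List<String> iterateWhileAdding() {
        List<String> list = new CopyOnWriteArrayList<>(List.of("a", "b"));
        Iterator<String> it = list.iterator();   // snapshot of [a, b]
        list.add("c");                           // copies the array; the iterator keeps the old one
        List<String> seen = new ArrayList<>();
        while (it.hasNext()) {
            seen.add(it.next());                 // no ConcurrentModificationException
        }
        return seen;
    }

    // Element-changing operations on the iterator are unsupported.
    static boolean iteratorRemoveIsUnsupported() {
        Iterator<String> it = new CopyOnWriteArrayList<>(List.of("a")).iterator();
        it.next();
        try {
            it.remove();
            return false;
        } catch (UnsupportedOperationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(iterateWhileAdding());
        System.out.println(iteratorRemoveIsUnsupported());
    }
}
```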
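The sketch below illustrates the DelayQueue row with a hypothetical DelayedTask element that becomes available a fixed time after it is created. While the delay hasn't expired, size() already counts the element, but poll() returns null. take() then blocks until getDelay(...) drops to zero or below. The 500 ms delay is arbitrary. The test assumes that put() and poll() run well within that window.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

public class DelayQueueDemo {

    // Hypothetical element type: available once `delay` has elapsed since construction.
    static final class DelayedTask implements Delayed {
        final String name;
        private final long readyAtNanos;

        DelayedTask(String name, long delay, TimeUnit unit) {
            this.name = name;
            this.readyAtNanos = System.nanoTime() + unit.toNanos(delay);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            // Remaining delay; zero or negative means the element is available.
            return unit.convert(readyAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            // Earliest expiry first, so the heap head is the next element to become available.
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    // Returns "sizeBefore,pollWasNull,takenName,sizeAfter".
    static String demo() {
        DelayQueue<DelayedTask> queue = new DelayQueue<>();
        queue.put(new DelayedTask("report", 500, TimeUnit.MILLISECONDS));

        int sizeBefore = queue.size();               // 1: an unexpired element still counts
        boolean pollWasNull = queue.poll() == null;  // true: the delay has not expired yet
        try {
            DelayedTask taken = queue.take();        // blocks roughly 500 ms
            return sizeBefore + "," + pollWasNull + "," + taken.name + "," + queue.size();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```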
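The SynchronousQueue row can be checked in the same way. In the sketch below (hypothetical class name), offer() fails because no consumer is waiting, and the collection-style methods report an empty queue. A put() in one thread then completes only when another thread calls take().

```java
import java.util.concurrent.SynchronousQueue;

public class SynchronousQueueDemo {

    // Returns "size,isEmpty,peek,offerWithoutConsumer,received".
    static String demo() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        boolean offered = queue.offer("x");      // false: no thread is waiting to receive
        String summary = queue.size() + "," + queue.isEmpty() + "," + queue.peek() + "," + offered;

        Thread producer = new Thread(() -> {
            try {
                queue.put("hello");              // waits until another thread takes it
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            String received = queue.take();      // rendezvous with the producer
            producer.join();
            return summary + "," + received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```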

Important things

- The performance price of all the above implementations is locking and synchronization overhead. Generally, if only one thread accesses the collection, we should use the non-synchronized java.util collections rather than concurrent implementations.

- All linked queue implementations are efficient compared to 'LinkedList' (which also implements Deque), as there's no searching for or removing of items by index.

- For typical two-thread producer-consumer 'Queue' scenarios, use blocking queues. If many threads access the queue, use one of the ConcurrentXYZ Queue/Deque implementations.

- The above implementations are a better choice than the Collections.synchronizedXYZ methods. Those methods synchronize every method on a common lock (the wrapper's 'this' monitor), which restricts access to a single thread at a time. The above implementations use finer-grained mechanisms, such as separate locks for put and take (LinkedBlockingQueue) or non-blocking algorithms (the ConcurrentXYZ classes).

- Sometimes we still want to choose Collections.synchronizedXYZ(..), but remember that these wrappers give lower throughput. We should use them only under low concurrency, and only when we want to be sure that all changes are immediately visible to other threads. When iterating, we need to synchronize externally on the returned collection to avoid ConcurrentModificationException.
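For the two-thread producer-consumer case mentioned above, a minimal sketch with an ArrayBlockingQueue looks like the following. Two choices are illustrative only and not part of any API: the POISON_PILL end-of-stream marker, which is assumed never to be a real value, and the buffer size of 2.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class ProducerConsumerDemo {

    private static final int POISON_PILL = -1; // hypothetical end-of-stream marker

    // Produces 0..count-1 on one thread, consumes them on another, returns what was consumed.
    static List<Integer> run(int count) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2); // small buffer: producer often waits
        List<Integer> consumed = new ArrayList<>();                 // touched only by the consumer thread

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++) {
                    queue.put(i);                // blocks while the buffer is full
                }
                queue.put(POISON_PILL);          // tells the consumer to stop
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    int value = queue.take();    // blocks while the buffer is empty
                    if (value == POISON_PILL) {
                        break;
                    }
                    consumed.add(value);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();                     // join() makes the consumer's writes visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(run(10));
    }
}
```

A poison pill is one common way to stop the consumer. Interrupting the consumer thread is another.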
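Synchronizing externally while iterating follows the pattern in the Collections.synchronizedList Javadoc: hold the returned list's monitor for the whole loop. The class and method names below are hypothetical. While the loop runs, other threads that call methods on the list wait for the same monitor.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class SynchronizedListIteration {

    // Sums the list while holding its monitor, so no writer can interleave with the iterator.
    static int sum(List<Integer> syncList) {
        int total = 0;
        synchronized (syncList) {
            for (int value : syncList) {
                total += value;
            }
        }
        return total;
    }

    public static void main(String[] args) throws InterruptedException {
        List<Integer> list = Collections.synchronizedList(new ArrayList<>());
        Thread writer = new Thread(() -> {
            for (int i = 1; i <= 1000; i++) {
                list.add(i);                    // each call locks the same monitor
            }
        });
        writer.start();
        System.out.println(sum(list));          // some partial sum, never a ConcurrentModificationException
        writer.join();
        System.out.println(sum(list));          // 500500 once the writer has finished
    }
}
```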

Choosing a Concurrent Collection


Source: https://www.logicbig.com/tutorials/core-java-tutorial/java-collections/concurrent-collection-cheatsheet.html